GSoC 2022: DeepChem - PyTorch Lightning

Over the summer of 2022, I worked on integrating PyTorch Lightning with the DeepChem library. PyTorch Lightning is a framework for building and training PyTorch models. The PyTorch Lightning features we leveraged in this project were:

- Multi-GPU training for the current set of DeepChem models. With this, DeepChem users can train their models on multiple GPUs; previously, DeepChem supported training on only a single GPU.

- Hydra config management which, through the Lightning integration, makes it easy to track model configurations and experiments.
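Lightning's standard multi-GPU strategies, such as DDP, are data-parallel: every GPU holds a copy of the model, computes gradients on its own shard of each batch, and the gradients are averaged across GPUs before the optimizer step. The following is a minimal plain-Python simulation of that idea, not DeepChem or Lightning code. It assumes a toy 1-D linear model with a squared-error loss and a dataset whose size divides evenly by the number of devices, and all function names are hypothetical. It shows why averaging per-device gradients gives the same update as single-device training.

```python
"""Plain-Python simulation of data-parallel (DDP-style) gradient averaging.

Not DeepChem or PyTorch Lightning code: each "device" is just a list of samples.
"""
from typing import List, Tuple

Sample = Tuple[float, float]  # (x, y) pair for a toy 1-D linear model y ~ w * x


def shard(samples: List[Sample], rank: int, world_size: int) -> List[Sample]:
    # Strided split: device `rank` takes every `world_size`-th sample.
    # Assumes len(samples) is divisible by world_size, so shards are equal-sized.
    return samples[rank::world_size]


def mse_grad(w: float, samples: List[Sample]) -> float:
    # Gradient of mean((w * x - y) ** 2) with respect to w.
    return sum(2.0 * (w * x - y) * x for x, y in samples) / len(samples)


def all_reduce_mean(values: List[float]) -> float:
    # Stand-in for the collective that averages gradients across devices;
    # on real hardware this is the inter-GPU communication step.
    return sum(values) / len(values)


def data_parallel_grad(w: float, samples: List[Sample], world_size: int) -> float:
    # Each simulated device computes a gradient on its own shard only.
    local = [mse_grad(w, shard(samples, r, world_size)) for r in range(world_size)]
    return all_reduce_mean(local)


if __name__ == "__main__":
    data = [(float(i), 3.0 * i + 0.5) for i in range(1, 9)]  # 8 toy samples
    w = 0.7
    for n in (1, 2, 4, 8):
        # The averaged gradient matches the full-batch gradient for every device count.
        print(n, data_parallel_grad(w, data, n), mse_grad(w, data))
```

In real PyTorch training, `torch.utils.data.distributed.DistributedSampler` performs a similar strided per-rank split of the (by default shuffled) dataset indices, padding the index list so that every rank receives the same number of samples. The averaging step is where inter-GPU communication happens, which is why adding GPUs does not scale training speed perfectly.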

DeepChem - PyTorch Lightning Integration Architecture

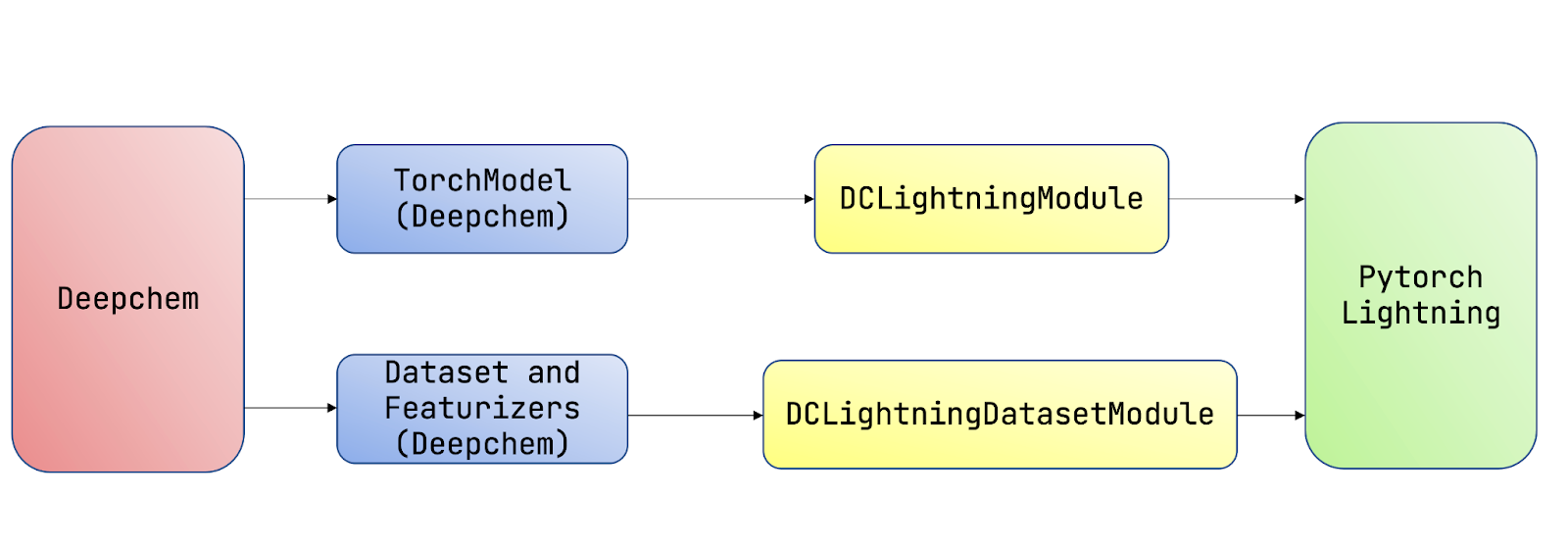

The two libraries interface through two classes: DCLightningModule and DCLightningDatasetModule. DCLightningModule inherits from PyTorch Lightning's LightningModule and defines the training, validation, and test steps for the model. DCLightningDatasetModule, in turn, defines how the data is prepared and fed into the model for training.

Fig-1: Interfacing architecture for DeepChem and PyTorch Lightning.
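To make this division of labour concrete, here is a minimal plain-Python sketch of the same split of responsibilities. It is not the DeepChem implementation: `ToyModel`, `ModelWrapper`, `DataWrapper`, and `fit` are hypothetical stand-ins so that the sketch runs without either library, and only the method names `training_step` and `train_dataloader` mirror real PyTorch Lightning hooks. The model wrapper decides what one training step computes, the data wrapper decides how the dataset is cut into batches, and the trainer-like `fit` loop owns the iteration and the parameter update.

```python
"""Hypothetical sketch of the model-wrapper / data-wrapper split (not DeepChem code)."""
from typing import Iterator, List, Tuple

Batch = Tuple[List[float], List[float]]


class ToyModel:
    """Hypothetical stand-in for a DeepChem model: predicts y = w * x."""

    def __init__(self, w: float = 0.0) -> None:
        self.w = w


class ModelWrapper:
    """Plays the DCLightningModule role: wraps the model and defines one step."""

    def __init__(self, model: ToyModel) -> None:
        self.model = model

    def training_step(self, batch: Batch, batch_idx: int) -> Tuple[float, float]:
        xs, ys = batch
        n = len(xs)
        errors = [self.model.w * x - y for x, y in zip(xs, ys)]
        loss = sum(e * e for e in errors) / n  # mean squared error
        grad = sum(2.0 * e * x for e, x in zip(errors, xs)) / n  # d(loss)/dw
        return loss, grad


class DataWrapper:
    """Plays the DCLightningDatasetModule role: turns a dataset into batches."""

    def __init__(self, xs: List[float], ys: List[float], batch_size: int) -> None:
        self.xs, self.ys, self.batch_size = xs, ys, batch_size

    def train_dataloader(self) -> Iterator[Batch]:
        for start in range(0, len(self.xs), self.batch_size):
            end = start + self.batch_size
            yield self.xs[start:end], self.ys[start:end]


def fit(module: ModelWrapper, data: DataWrapper, epochs: int, lr: float) -> List[float]:
    """Stand-in for a trainer: it owns the loop and the optimizer update."""
    losses = []
    for _ in range(epochs):
        for batch_idx, batch in enumerate(data.train_dataloader()):
            loss, grad = module.training_step(batch, batch_idx)
            module.model.w -= lr * grad  # plain SGD update
            losses.append(loss)
    return losses


if __name__ == "__main__":
    model = ToyModel()
    data = DataWrapper([1.0, 2.0, 3.0, 4.0], [3.0, 6.0, 9.0, 12.0], batch_size=2)
    losses = fit(ModelWrapper(model), data, epochs=20, lr=0.05)
    print(round(model.w, 4), losses[0], losses[-1])  # w approaches 3.0
```

Validation and test steps would follow the same pattern as `training_step`. One simplification to note: in real PyTorch Lightning, `training_step` returns only the loss, and the Trainer obtains gradients through autograd and applies the optimizer returned by the module's `configure_optimizers` hook.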

Results

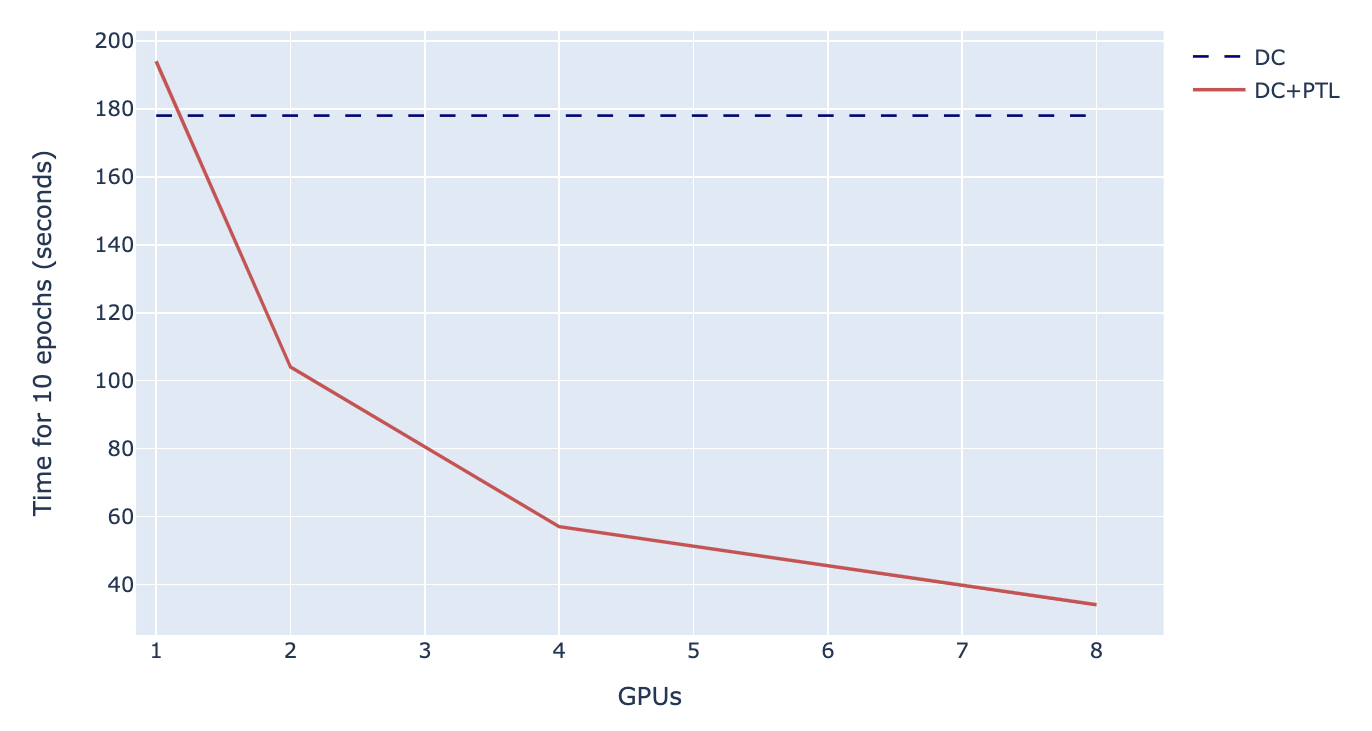

As a result of this project, we established modules and pipelines with which DeepChem users can integrate their models into the PyTorch Lightning framework and use the features Lightning offers. How to do this is discussed in the next section. We also benchmarked the integration using a graph convolution model trained on the ZINC15 dataset; the resulting speedups are reported in Fig-2.

Fig-2: Training-speed benchmark of a graph convolution model trained on the ZINC15 dataset using DeepChem + PyTorch Lightning (DC+PTL) and DeepChem alone (DC), with 1, 2, 4, and 8 GPUs. Because DeepChem natively supports only single-GPU training, for comparison we extrapolate the single-GPU DeepChem speed across the different GPU counts.

We see a 5.2x speedup using 8 GPUs with DeepChem + PyTorch Lightning compared to DeepChem alone on 1 GPU. The speedup is sublinear in the number of GPUs because multi-GPU training incurs communication overhead between the GPUs.
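The reported numbers can be turned into two standard scaling metrics. The sketch below uses only the 5.2x and 8-GPU figures above and computes parallel efficiency (speedup divided by GPU count) and the Karp-Flatt metric, which estimates the fraction of work that behaves as if it did not parallelize. These metrics are an interpretive addition, not part of the project's benchmark. Because the baseline is DeepChem on 1 GPU rather than DeepChem + PyTorch Lightning on 1 GPU, they also absorb any single-GPU speed difference between the two setups.

```python
"""Scaling metrics derived from the reported benchmark figure (5.2x on 8 GPUs)."""


def parallel_efficiency(speedup: float, n_gpus: int) -> float:
    # Fraction of ideal linear scaling achieved; 1.0 would mean speedup == n_gpus.
    return speedup / n_gpus


def karp_flatt(speedup: float, n_gpus: int) -> float:
    # Karp-Flatt metric: the "experimentally determined serial fraction".
    # Truly serial work and communication overhead both show up in this number.
    return (1.0 / speedup - 1.0 / n_gpus) / (1.0 - 1.0 / n_gpus)


if __name__ == "__main__":
    s, n = 5.2, 8  # speedup and GPU count reported above
    print(f"efficiency = {parallel_efficiency(s, n):.2f}")  # efficiency = 0.65
    print(f"serial fraction = {karp_flatt(s, n):.3f}")      # serial fraction = 0.077
```

For these inputs the Karp-Flatt estimate is exactly 1/13. If the per-GPU-count timings behind Fig-2 are at hand, computing `karp_flatt` for N = 2, 4, and 8 is a quick diagnostic: an estimate that grows with N points to overhead such as communication, while a roughly constant one points to a fixed non-parallel portion of each step.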

This project also enabled the use of the Hydra experimentation framework with DeepChem models, as documented in PR #3030.
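In the Hydra workflow, every run is described by a structured config, and individual values are changed from the command line with dotted `key=value` overrides (for example, a hypothetical `python train.py trainer.devices=4`). The sketch below imitates only that override step in plain Python so it runs without Hydra installed. `apply_overrides`, `parse_value`, and the config keys are hypothetical and are not taken from the #3030 example. Hydra itself also provides config groups, multirun sweeps, and a per-run output directory holding the resolved config, none of which is reproduced here.

```python
"""Plain-Python imitation of Hydra-style dotted command-line overrides."""
import copy
import json
from typing import Any, Dict, List


def parse_value(text: str) -> Any:
    # JSON parsing turns "4" into an int, "3e-4" into a float and "true" into a bool;
    # text that is not valid JSON (e.g. graphconv) is kept as a plain string.
    try:
        return json.loads(text)
    except json.JSONDecodeError:
        return text


def apply_overrides(base: Dict[str, Any], overrides: List[str]) -> Dict[str, Any]:
    """Return a copy of `base` with each "a.b.c=value" override applied."""
    cfg = copy.deepcopy(base)  # never mutate the shared base config
    for item in overrides:
        dotted_key, raw = item.split("=", 1)
        *parents, leaf = dotted_key.split(".")
        node = cfg
        for key in parents:
            node = node[key]  # KeyError for an unknown section
        if leaf not in node:
            # Like Hydra's default behaviour, refuse to create keys that are not
            # already in the config (Hydra requires a "+" prefix to add one).
            raise KeyError(dotted_key)
        node[leaf] = parse_value(raw)
    return cfg


if __name__ == "__main__":
    # Hypothetical base config; these keys are illustrative only.
    base = {"model": {"name": "graphconv", "learning_rate": 0.001},
            "trainer": {"max_epochs": 10, "devices": 1}}
    run_cfg = apply_overrides(base, ["trainer.devices=4", "model.learning_rate=3e-4"])
    # Storing the resolved config alongside each run's results keeps runs traceable.
    print(json.dumps(run_cfg, sort_keys=True))
```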

Using the DeepChem - PyTorch Lightning Integration

The benchmarking PR (#3016) is a good starting point for using PyTorch Lightning with DeepChem models. We implemented the integration with the aim of supporting all DeepChem models and datasets; if your model is not supported, it will require changes to DCLightningModule and DCLightningDatasetModule.

Pull Requests (PRs) and Documentation

Below is a list of all the pull requests we implemented as part of the summer project.

- #2806: GPU installation fix to enable experimentation on GPUs.

- #2826: Initial PR prototyping a DeepChem - PyTorch Lightning integration in a notebook.

- #2945: DCLightningModule implementation.

- #2958: GCN (Graph Convolutional Network) usage with DCLightningModule.

- #2993: DCLightningDatasetModule implementation.

- #2994: DCLightningDatasetModule tests.

- #3016: Benchmarking script for GPUs.

- #3042: Update the PyTorch Lightning version.

- #3030: Hydra example using DeepChem + PyTorch Lightning.

- #3035: Dataloader changes to enable multi-GPU training.

Progress of the project is also summarised in the following resources: