Hi all,

I’m having trouble saving weights while training my DeepChem GraphConvModel and would love any advice. I’ve tested a few different scenarios:

- Running my models in a Jupyter (.ipynb) notebook, fitting the model, and saving the checkpoints works as expected. The checkpoints are saved in the specified model directory.

- Running my models from .py scripts also saves the checkpoints; however, as soon as the Python script finishes, the checkpoints disappear.

I’ve tried adjusting parameters, including the number of checkpoints to keep (omitting it or passing an arbitrary number), but the checkpoints still don't persist when I train from a Python script. Any thoughts?

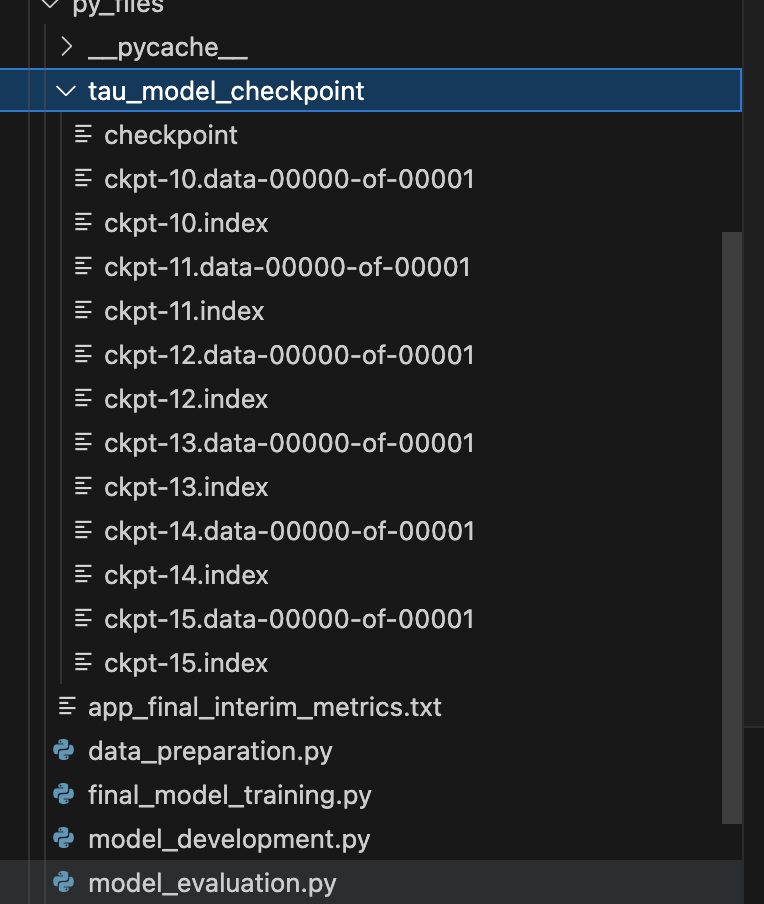

The screenshot shows example output during training. Immediately after the script finishes executing, the directory disappears.

The part of the code involved in training the model is included below.

```python
import deepchem as dc
from sklearn.metrics import log_loss  # assumed: scikit-learn's log_loss

# parameters, train, valid, dc_metrics, model_evaluation, base_path, target
# and probability_threshold are defined elsewhere in the script
model = dc.models.GraphConvModel(n_tasks=1, mode='classification',
                                 graph_conv_layers=parameters["graph_conv_layers"],
                                 batchnorm=parameters['batchnorm'],
                                 dropout=parameters['dropout'],
                                 dense_layer_size=parameters['dense_layer_size'],
                                 batchsize=parameters['batch_size'],
                                 max_checkpoints_to_keep=20)

all_metrics = {}
for i in range(1, 11):
    model.fit(train, nb_epoch=1)

    # every n epochs evaluate the model
    if i % 2 == 0:
        validation_preds = model.predict(valid)
        metrics = model.evaluate(valid, metrics=dc_metrics())
        metrics['log_loss'] = log_loss(valid.y, [p[0] for p in validation_preds])
        all_metrics = model_evaluation.prediction_metrics(metrics, validation_preds,
                                                          valid.y, probability_threshold)
        print(f'\nEpoch {i}\n------------------------')

# every 10 epochs save the weights
model.model_dir = f'{base_path}{target}_model_checkpoint'
model.save_checkpoint()
```
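
One possible explanation worth checking, sketched with a small stand-in class rather than DeepChem itself. DeepChem's `Model` base class appears to create a temporary directory with `tempfile.mkdtemp()` when no `model_dir` is passed to the constructor, and to flag that directory for deletion in `__del__`. Because the code above only reassigns `model.model_dir` after construction, the flag would stay set, and the directory deleted when the model is garbage collected would be the checkpoint directory itself. At the end of a script that happens automatically; in a notebook the kernel keeps `model` alive, so nothing is deleted. `TempDirModel` and `demo` are hypothetical names used only for illustration, and the DeepChem behavior they imitate should be verified against the installed version's source.

```python
import os
import shutil
import tempfile


class TempDirModel:
    """Hypothetical stand-in imitating (by assumption) how a DeepChem model
    manages model_dir: with no model_dir, a temp dir is created and flagged
    for deletion when the object is garbage collected."""

    def __init__(self, model_dir=None):
        if model_dir is not None:
            os.makedirs(model_dir, exist_ok=True)
            self.model_dir_is_temp = False
        else:
            model_dir = tempfile.mkdtemp()
            self.model_dir_is_temp = True
        self.model_dir = model_dir

    def save_checkpoint(self):
        # Write a dummy checkpoint into whatever model_dir currently points to.
        os.makedirs(self.model_dir, exist_ok=True)
        with open(os.path.join(self.model_dir, "checkpoint1"), "w") as f:
            f.write("weights")

    def __del__(self):
        # The flag is set once in __init__; reassigning model_dir later does
        # not clear it, so the *current* model_dir is the one removed.
        if getattr(self, "model_dir_is_temp", False):
            shutil.rmtree(self.model_dir, ignore_errors=True)


def demo(late_assignment):
    """Return whether the checkpoint directory survives model teardown."""
    target_dir = os.path.join(tempfile.mkdtemp(), "my_model_checkpoint")
    if late_assignment:
        model = TempDirModel()              # no model_dir, as in the script above
        model.model_dir = target_dir        # reassigned after construction
    else:
        model = TempDirModel(model_dir=target_dir)  # passed to the constructor
    model.save_checkpoint()
    del model  # in a script, this happens implicitly at interpreter exit
    return os.path.exists(target_dir)


print(demo(late_assignment=True))   # False: directory deleted on teardown
print(demo(late_assignment=False))  # True: directory survives
```

If DeepChem behaves like this sketch, passing the directory up front, e.g. `dc.models.GraphConvModel(..., model_dir=f'{base_path}{target}_model_checkpoint')`, or leaving `model.model_dir` untouched and calling `model.save_checkpoint(model_dir=...)`, should keep the checkpoints after the script exits. Relatedly, `max_checkpoints_to_keep` appears to be an argument of `fit()` and `save_checkpoint()` rather than of the model constructor, and the constructor names `batchnorm`/`batchsize` may differ from `GraphConvModel`'s `batch_normalize`/`batch_size`; unrecognized constructor keywords may be silently absorbed by `**kwargs`.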

Thanks in advance!